Thoughts by Humans

Thought #21 - Invisible Infrastructure is Expensive

AI is becoming part of the system - but not always in ways we notice

Hi lovely humans,

Some of this week’s biggest AI moves aren’t flashy features, but shifts in infrastructure, funding and expectations. Meta and Trump are each pledging billions, Google’s AI summaries are reshaping traffic, and the UK is recruiting engineers to build public sector models. Meanwhile, smaller updates from Claude and Perplexity continue to nudge AI into daily tools. And in the background, we’re seeing new questions about where transparency and trust fit in.

Our Week in AI

Claude Can Code, The Internet Can’t

I’m aware my current career is very AI-based, and it’s probably easy to assume I’m a big AI fan. But I’m actually not. I think the tech has real limitations, and that (being on brand here) education on how to use these tools well is essential: both to avoid overwhelm and, more importantly, to avoid dangerous or sloppy use.

Which brings me to “vibe coding” - a term that genuinely gives me the ick.

I’m a big fan of using Claude for coding help. But I’m also noticing it slows me down sometimes when I use it for something advanced or complex.

There’s a pattern here worth calling out: Claude can’t write good React code - because most people can’t.

I’ve eased off using Claude for coding as much as I was. I recently did a platform refactor and properly enjoyed it. Now, I tend to use Claude more like I’d use Stack Overflow - when I’m stuck or want to check a pattern.

One thing I finally figured out: Claude keeps introducing a weird re-rendering issue into our platform. It causes screen flicker, or the same database write firing 70 times over. Thankfully, it’s only ever been in dev branches so far.

But the root of the issue? Claude is trained on “the internet”, which means there’s no minimum quality check on what it’s picked up. And React is often used badly: overused useEffects, a poor understanding of data fetching, and general performance traps (if you know, you know; if not, just know they can make a site look fine but run badly). That’s the common baseline Claude is learning from.
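For anyone curious about one of those traps, here’s a minimal, framework-free TypeScript sketch of how React decides whether to re-run an effect: it compares each value in the dependency array against the previous render using Object.is. This is a simplified model, not React’s actual code and not the specific bug in our platform. The function names (depsChanged, countEffectRuns) are hypothetical, and the sketch assumes the component re-renders 70 times regardless (for example, because the effect itself updates state). A dependency created fresh on every render, like an object literal, never compares equal, so the effect, and any database write inside it, fires every single time.

```typescript
// Hypothetical, simplified model of React's effect dependency check.
// React compares each dependency with Object.is; this sketch mimics that rule.
type Deps = readonly unknown[];

function depsChanged(prev: Deps | undefined, next: Deps): boolean {
  if (prev === undefined) return true; // first render: the effect always runs
  if (prev.length !== next.length) return true;
  for (let i = 0; i < next.length; i++) {
    if (!Object.is(next[i], prev[i])) return true; // any changed dependency re-runs it
  }
  return false;
}

// Simulate a number of renders and count how often the effect would fire.
function countEffectRuns(renders: number, makeDeps: (render: number) => Deps): number {
  let prev: Deps | undefined = undefined;
  let runs = 0;
  for (let r = 0; r < renders; r++) {
    const next = makeDeps(r);
    if (depsChanged(prev, next)) runs++;
    prev = next;
  }
  return runs;
}

const userId = 42;

// Anti-pattern: a new object on every render is never Object.is-equal to the last one.
const unstable = countEffectRuns(70, () => [{ userId }]);

// Stable primitive dependency: the effect runs only once.
const stable = countEffectRuns(70, () => [userId]);

console.log(unstable, stable); // 70 1
```

In real React code, the usual fixes are to depend on primitive values (userId rather than { userId }), or to skip the effect entirely when a value can be calculated during render, which is the advice in React’s own “You Might Not Need an Effect” guide.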

This didn’t use to be a problem. I’d give Claude my whole codebase (which is relatively clean) and it would mostly replicate from that. But when I started asking for faster help without context - trying to speed things up - I ended up slowing myself down.

What’s helping now is adding a line like this to my prompt:

Don’t overuse useEffect, check for anti-patterns, ensure code simplicity, and use React’s official documentation where possible.

Small reminder, yet again, that these tools are only as good as the habits we encourage.

AI New Releases

Claude launches financial tools and connectors

Claude has released new features aimed at financial services teams - including tools for summarising research and generating client content - plus a directory of connectors to integrate Claude into existing workflows.

Mistral’s new voice model: Voxtral

Mistral has launched an open-source voice model, Voxtral. Early examples are decent - but still sound AI-ish.

Perplexity launches ‘Comet’ browser

A new AI-powered browser designed to ‘get to know you personally’. Currently in closed beta - more of a concept launch than a tool most of us can use yet.

Microsoft Copilot update

A small update with big implications - Copilot can now see your whole screen (not just your prompt). Still in testing, but worth keeping an eye on how this gets used (and checking your settings).

AI News

Meta to invest ‘hundreds of billions’ in AI infrastructure

Zuckerberg has confirmed plans for vast new data centres - and a focus on superintelligence.

Trump pledges $70bn for AI + energy

The President is meeting with tech and energy executives, with a focus on making the US a leader in AI and energy. More talk than detail, but a big number all the same.

Turing Institute calls for AI secondments

A new UK programme is offering secondments for technologists to work on open-source models for public services. Applications open now.

Google’s AI summaries hurting publishers

As Google adds more AI-generated summaries to Discover, publishers report further drops in traffic. Raises familiar questions about incentives, visibility, and where content value lands.

Anthropic signs US defence contract

A sign of how quickly AI companies are entering national security spaces. This is part of a push for ‘responsible AI’ in defence - though details are thin.

OpenAI prepares for advanced bio-AI use

A research update from OpenAI on how they’re preparing for future uses of AI in biology. Not a product release, but interesting to see the direction of travel.

OpenAI’s Windsurf deal falls through - Google steps in

OpenAI was in talks to acquire AI coding company Windsurf, but the deal didn’t go through. Now key members of the team (and a licence to some of its technology) are at Google.

WeTransfer clarifies its terms

An update to WeTransfer’s T&Cs caused panic that your files might be used to train AI. They’ve now confirmed: they’re not. But it’s another reminder of how on edge people are.

Not Quite News, But Worth a Read (or Listen or Watch)

Who trained the band?

An AI-generated band hit over 1 million listens on Spotify before anyone noticed. It’s sparked new questions about whether we should be told when something is AI-made, or just judge it on its own merits.

Graduates are worried about AI and work

A Guardian piece on the anxieties of new graduates entering a job market that AI is reshaping. Not news, but good context.

LinkedIn AI Poll

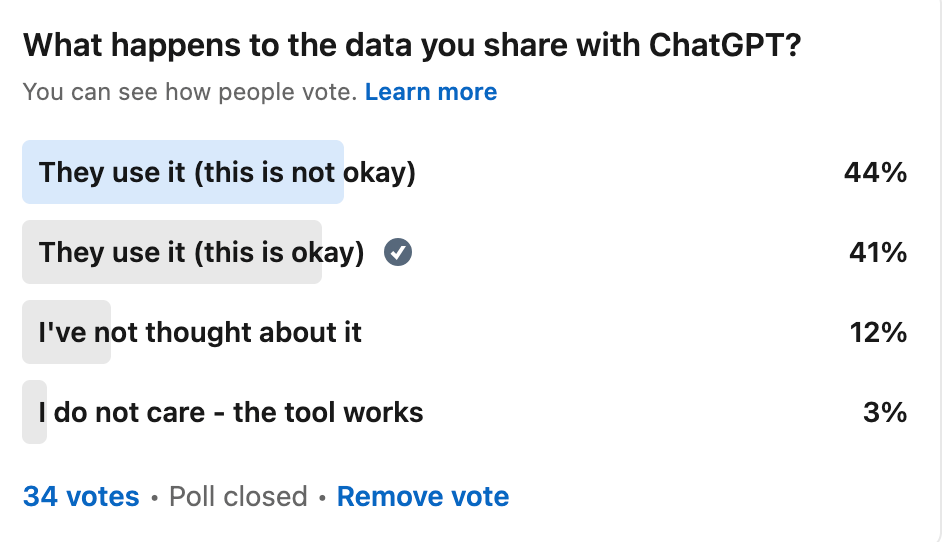

Last week we asked about AI models using our data (this is one in a series of polls on something I want to understand better).

I’m surprised that 44% of people think OpenAI use your data and that this isn’t okay. Let me explain why I’m surprised: I think all companies are using our data for their own gains (especially when we aren’t paying for the product; if you’re not paying, you’re the product). OpenAI are actually quite upfront about their data use, and give options to minimise it. So I would love to understand: why are people holding them to a higher standard than, say, Google or Zoom?

Vote in this week’s poll - please!

This week we want to know if you’re using voice when you interact with AI tools.

Final Thoughts

As always, we hope this was helpful!

Feel free to share this with anyone who might find it useful.

Next week, my prediction (and wish) is that AI takes a summer holiday.

Laura

Always learning