Thought #23 - Regulation, Reliability, and a Bit of Vibe Coding

A slower week for AI news, but one that showed where the real shifts are happening - in policy, usage limits, and how we actually build.

Hi lovely humans,

Before I jinx next week by saying this out loud - it’s been an oddly quiet one in terms of AI volume, but the stories themselves were big.

The good news is it gives us a chance to go a bit deeper than usual.

This week saw a big shift in regulation (with the US and EU revealing very different plans), Anthropic changing how it limits Claude use, and OpenAI launching a new study mode for students.

Our Week in AI

When AI Works (and When It Doesn’t)

We talk a lot about vibe coding - that thing where you ask an AI to build something without knowing what you really want. It rarely ends well.

But when you’ve done the planning - when you’ve got a clear idea, a bit of headspace, and time to test - AI can be genuinely brilliant.

This week Claude helped me build a document uploader I’m actually proud of. It’s simple, reliable, and has already saved our team hundreds of hours of manual work.

Also:

ChatGPT helped me decode a confusing knitting pattern (and save the arms of my cardigan)

I used AI to extract filenames from a screenshot (tiny, but it saved time)

But it did let me down. It could not:

Find the show The Returned. Shocking that it didn’t get it from “show about people disappearing and coming back. Includes a school bus. And twins where only one had aged” (a lesson in prompting, and in not using it like a search engine)

Write a LinkedIn poll - it really struggled with the limit of fewer than 30 characters per option (and the sarcastic tone I was going for)

Create a really specific image for some content (we turned to our usual Excalidraw+)

Some wins, some losses - but a nice reminder that these tools are most useful when you meet them halfway.

AI New Releases

OpenAI releases ChatGPT study mode - flashcards, memory games, and spaced repetition are now built in. We’ve had a quick play around with some AI learning for the workplace, and we’re not super impressed. It didn’t feel better than normal ChatGPT (actually worse, and didn’t seem to know anything about me). It is aimed at students with a curriculum, so maybe I’m not the target audience.

ChatGPT Study Mode

Microsoft launches Copilot mode in Edge - an “always there” side panel to ask questions while you browse.

Anthropic blog on how their own teams use Claude Code - a short post, but an interesting example of how they’re using it for debugging and test writing.

AI News

Regulation - A Gap is Growing Across the Water

I’m going to give some takeaways, so you don’t have to read these enormous docs.

The US has released America’s AI Action Plan. What stuck out to us:

Training is framed as a labour pipeline - new programmes will support the growth of data centres and chip factories, but there's little mention of broader AI literacy or upskilling existing workforces in safe and thoughtful use.

Regulation is being rolled back - federal agencies are being told to use more AI, faster. States with stronger guardrails could lose federal funding, raising questions about accountability and standards.

The plan views AI as a strategic asset - national security and economic competition (particularly with China) are driving decisions. That might accelerate innovation, but it risks sidelining public interest concerns.

The EU, on the other hand, is going hard on regulation with the EU AI Act:

Training is treated as a legal question, not a learning one - providers must document and disclose what content their models were trained on, mainly to address copyright. There’s little here on AI skills for the wider workforce or how people can safely and confidently use these tools.

Regulation is being built in early - the guidance is part of a structured rollout under the EU AI Act. While voluntary now, the Code of Practice signals the direction of future enforcement: more transparency, more rights protections, and stricter requirements for models seen as high‑risk.

AI is framed as a system to govern, not a race to win - the emphasis is on safeguarding creativity, protecting fundamental rights, and building trust. There’s less urgency, but more clarity - especially for those working in regulated sectors or dealing with IP-heavy content.

tl;dr

For UK businesses, this means navigating two very different pressures - EU-style compliance grounded in transparency and rights, and US-led innovation that prioritises speed, scale and strategic dominance.

Anthropic Tries to Save Some Money (and the Planet)

Anthropic’s email to subscribers

In a slightly controversial move, Anthropic have announced weekly usage limits (on top of their current limits every 5 hours). They say this is because some users are running Claude nearly 24/7. If you use Claude, you’ll have seen more errors and outages over the last few weeks, so this doesn’t come as a surprise (and might be a good thing).

It’s a good lesson in not becoming too dependent on these tools, especially when the companies behind them are losing money and constantly changing pricing and plans.

Other AI News

Google Search is now showing AI-generated overviews in the UK - opt-in for now, but a clear sign of where things are heading. Note: this is different from what we currently see - a summary from one specific source. These new overviews are written by Google’s AI, drawing from several sources. The impact on SEO is not yet clear.

Anthropic has a lead investor for their $5B round - another huge raise coming soon.

Apple loses another AI researcher to Meta - four have now moved across to Meta’s superintelligence team.

The Pope on AI and creativity - a mass for content creators with a clear call that AI should support (not replace) human creativity.

Not Quite News, But Worth a Read (or Listen or Watch)

Sam Altman is afraid of GPT-5 and wants your chats to have legal protection - a very deliberate line in a tech bro podcast about being afraid of how powerful GPT-5 is. Take from that what you will. He also pointed out that chats with ChatGPT don’t have legal protection and, as people use it like a therapist, he thinks they should.

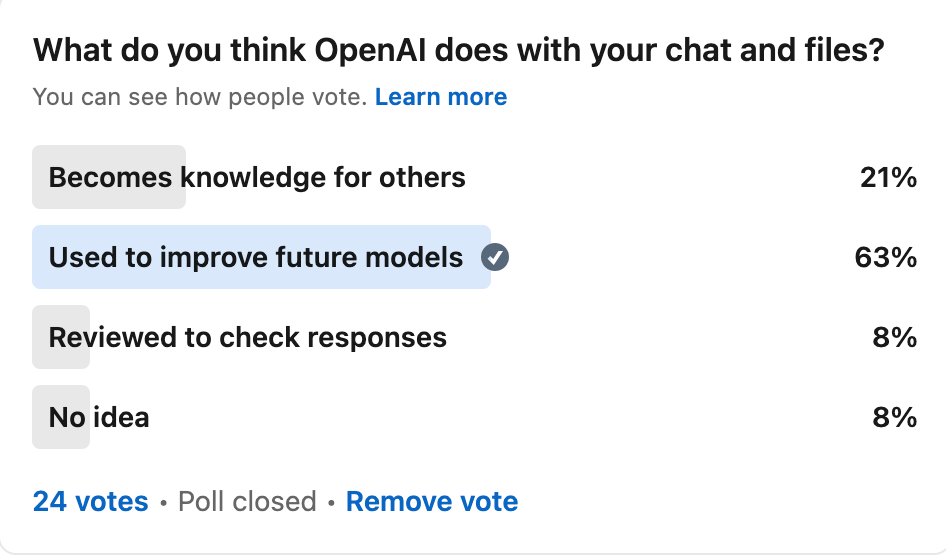

LinkedIn AI Poll

Last week we asked what you think happens to your data when you use an AI tool.

Most people said it is used to improve future models.

Vote in this week’s poll - please!

So this week we want to know what you think training a model actually means - what happens when OpenAI (or some other company) uses your data to train a model?

Final Thoughts

As always we hope this was helpful!

Feel free to share this with anyone who might find it useful.

Next week, hopefully everyone stays on summer holidays in terms of AI news.

Laura

Always learning